Decision Tree

Decision tree learning is one of the most widely adopted algorithms for classification. As the name indicates, it builds a model in the form of a tree structure. Its classification accuracy is competitive with other methods, and it is very efficient.

A decision tree is used for multi-dimensional analysis with multiple classes. It is characterized by fast execution time and by rules that are easy to interpret. The goal of decision tree learning is to create a model, based on past data (previously observed feature vectors), that predicts the value of the output variable from the input variables in the feature vector. Each internal node (or decision node) of a decision tree corresponds to one of the features in the feature vector. From every such node there are edges to its children, one edge for each possible value (or range of values) of the feature associated with that node. The tree terminates at leaf nodes (or terminal nodes), each of which represents a possible value of the output variable. The output variable is determined by following a path that starts at the root and is guided by the values of the input variables.
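The prediction procedure described above can be sketched in Python. This is a minimal illustration, not a reference implementation. The `Node` class, the `predict` function, and the toy features `outlook` and `windy` are hypothetical. Every edge is keyed by a single discrete value; a numeric feature would need a threshold or range test instead, which is not shown here.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class Node:
    """Hypothetical node type: a decision node tests one feature, a leaf stores an output value."""
    feature: Optional[str] = None                               # feature tested here (None for a leaf)
    children: Dict[Any, "Node"] = field(default_factory=dict)   # one edge per possible feature value
    label: Optional[str] = None                                 # output value (set only on leaves)


def predict(node: Node, sample: Dict[str, Any]) -> Any:
    """Start at the root and follow the edge matching the sample's value until a leaf is reached."""
    while node.feature is not None:
        node = node.children[sample[node.feature]]
    return node.label


# Hypothetical toy tree: the root tests "outlook"; the "rainy" branch tests "windy".
tree = Node("outlook", {
    "sunny": Node(label="yes"),
    "rainy": Node("windy", {
        True: Node(label="no"),
        False: Node(label="yes"),
    }),
})

print(predict(tree, {"outlook": "rainy", "windy": True}))   # no
print(predict(tree, {"outlook": "sunny", "windy": True}))   # yes
```

Only the features on the chosen path are ever consulted, which is one reason prediction with a trained tree is fast.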

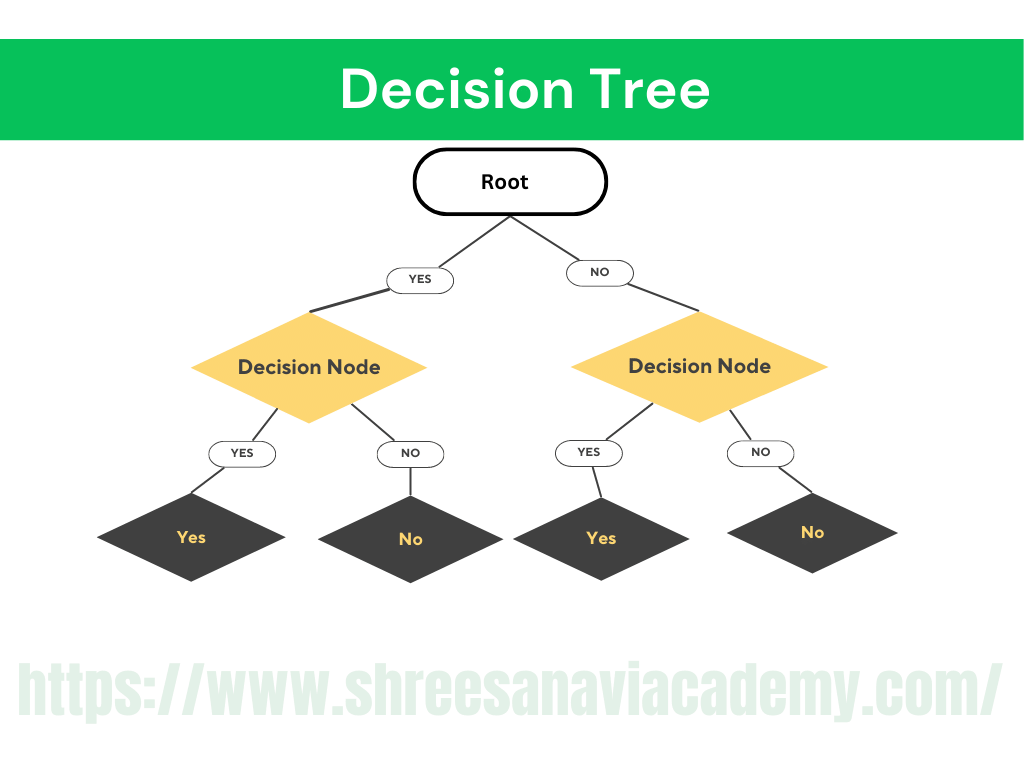

Each internal node tests an attribute (represented as Root and Decision Node in the figure). Each branch corresponds to an attribute value (Yes/No in the above case). Each leaf node assigns a classification. The first node is called the root node, the internal nodes below it are called branch (decision) nodes, and the terminal nodes are called leaf nodes. In the figure, 'Root' is the root node, 'Decision Node' is a branch node, and 'Yes' and 'No' are leaf nodes.

Thus, a decision tree consists of three types of nodes:

- Root Node

- Branch Node

- Leaf Node
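As a small illustration of these three roles, the sketch below labels every node of a tree stored as a parent-to-children mapping. The node names only loosely mirror the figure and are hypothetical, as is the `node_types` helper. The rule it applies: the root is the starting node, branch nodes have children, and leaf nodes have none.

```python
from typing import Dict, List


def node_types(children: Dict[str, List[str]], root: str) -> Dict[str, str]:
    """Classify every node reachable from `root` as 'root', 'branch', or 'leaf'."""
    types: Dict[str, str] = {}
    stack = [root]
    while stack:
        node = stack.pop()
        kids = children.get(node, [])
        if node == root:
            types[node] = "root"
        elif kids:
            types[node] = "branch"   # internal node that tests a further attribute
        else:
            types[node] = "leaf"     # terminal node that assigns a classification
        stack.extend(kids)
    return types


# Hypothetical tree: the root has one decision node and one leaf below it.
tree = {
    "Root": ["Decision Node", "Leaf 1"],
    "Decision Node": ["Leaf 2", "Leaf 3"],
}
print(node_types(tree, "Root"))
```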

Advantages of Decision Trees

- It generates rules that are easy to understand. For smaller trees, little computational or mathematical expertise is needed to interpret the model.

- It works well for many problems.

- It can handle both numerical and categorical variables.

- It can work well with both small and large training data sets.

- It gives a clear indication of which features are most useful for classification.
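To make the first advantage concrete, every path from the root to a leaf can be read off as an IF-THEN rule. The sketch below assumes a hypothetical representation in which a leaf is a plain string label and a decision node is a `(feature, {value: subtree})` pair. The `extract_rules` function and the toy features are illustrative only.

```python
from typing import Any, List, Tuple


def extract_rules(tree: Any, conditions: Tuple[Tuple[str, Any], ...] = ()) -> List[str]:
    """Return one IF-THEN rule per root-to-leaf path of the tree."""
    if isinstance(tree, str):  # leaf: emit the conditions collected along the path
        cond = " AND ".join(f"{f} = {v}" for f, v in conditions) or "TRUE"
        return [f"IF {cond} THEN {tree}"]
    feature, branches = tree   # decision node: recurse into each branch
    rules: List[str] = []
    for value, subtree in branches.items():
        rules.extend(extract_rules(subtree, conditions + ((feature, value),)))
    return rules


# Hypothetical toy tree.
tree = ("outlook", {
    "sunny": "yes",
    "rainy": ("windy", {True: "no", False: "yes"}),
})

for rule in extract_rules(tree):
    print(rule)
```

Running it prints one rule per leaf:

```
IF outlook = sunny THEN yes
IF outlook = rainy AND windy = True THEN no
IF outlook = rainy AND windy = False THEN yes
```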

Disadvantages of Decision Trees

- Decision tree models frequently favour features with a greater number of levels, or possible values.

- Decision trees overfit or underfit the training data quite easily.

- In classification problems with many classes and a limited number of training examples, decision trees are prone to errors.

- Training a decision tree can be computationally expensive.

- Large trees are difficult to understand.
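The bias toward many-valued features can be seen with information gain, the entropy-based split criterion used by ID3-style learners. This criterion is assumed here, since the section does not name one. In the hypothetical four-row table below, the `id` column has a distinct value in every row. Splitting on it therefore produces pure groups and the maximum possible gain, even though it carries no information that generalizes to new records. The `entropy` and `information_gain` helpers are illustrative.

```python
import math
from collections import Counter
from typing import Any, Dict, List, Sequence


def entropy(labels: Sequence[str]) -> float:
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows: List[Dict[str, Any]], feature: str, target: str) -> float:
    """Entropy of the target minus the weighted entropy after splitting on `feature`."""
    groups: Dict[Any, List[str]] = {}
    for r in rows:
        groups.setdefault(r[feature], []).append(r[target])
    remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy([r[target] for r in rows]) - remainder


# Hypothetical training data: "id" is unique per row, "windy" is a genuine feature.
rows = [
    {"id": 1, "windy": False, "play": "yes"},
    {"id": 2, "windy": False, "play": "yes"},
    {"id": 3, "windy": True,  "play": "no"},
    {"id": 4, "windy": True,  "play": "yes"},
]

for feature in ("id", "windy"):
    print(f"{feature}: {information_gain(rows, feature, 'play'):.3f}")
```

Here `id` scores about 0.811 bits (the full entropy of `play`) while `windy` scores about 0.311 bits. A tree that splits on `id` simply memorizes the training rows, which also illustrates how easily decision trees overfit.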